By Amar Rao

Creating an exam for complicated technologies is a tricky business, and doing it right takes time. It took 12 months of work to create and launch the Cloud Foundry Certified Developer (CFCD) exam. This post is about that journey.

The exam lets developers demonstrate proficiency in developing cloud-native applications on the Cloud Foundry platform, and it can be taken anywhere, at any time. It is deployed on The Linux Foundation's cloud-based exam infrastructure and uses the latest tools available. The environment through which candidates access the Cloud Foundry instance is hosted on Swisscom. The project was a collaborative effort among Cloud Foundry community subject matter experts (SMEs), The Linux Foundation and Biarca.

This certification exam is designed as a performance-based test: the candidate's proficiency is assessed not by testing knowledge of the subject, but by having the candidate solve real-world tasks encountered on the job. Each problem requires the candidate to work in a "real" CF environment and to deploy and manipulate application code.

Development began in June 2016, following a series of workshops facilitated by Wallace Judd, a psychometrician from Authentic Testing. In these workshops, Cloud Foundry SMEs from leading global organizations were assembled to formulate the exam questions, with Steve Greenberg of Resilient Scale serving as a technical advisor and SME. Because the participants live across the country and hold other full-time jobs, coordinating this activity was a challenge, but we worked together to make it happen.

Biarca created the automation that provisions each question's setup and configuration, providing the environment in which candidates answer the questions. In addition, an automated grading system had to be developed to assess and grade the candidates. In parallel, The Linux Foundation handled the end-to-end integration of the various systems needed to deploy the exam on its cloud-based exam platform.

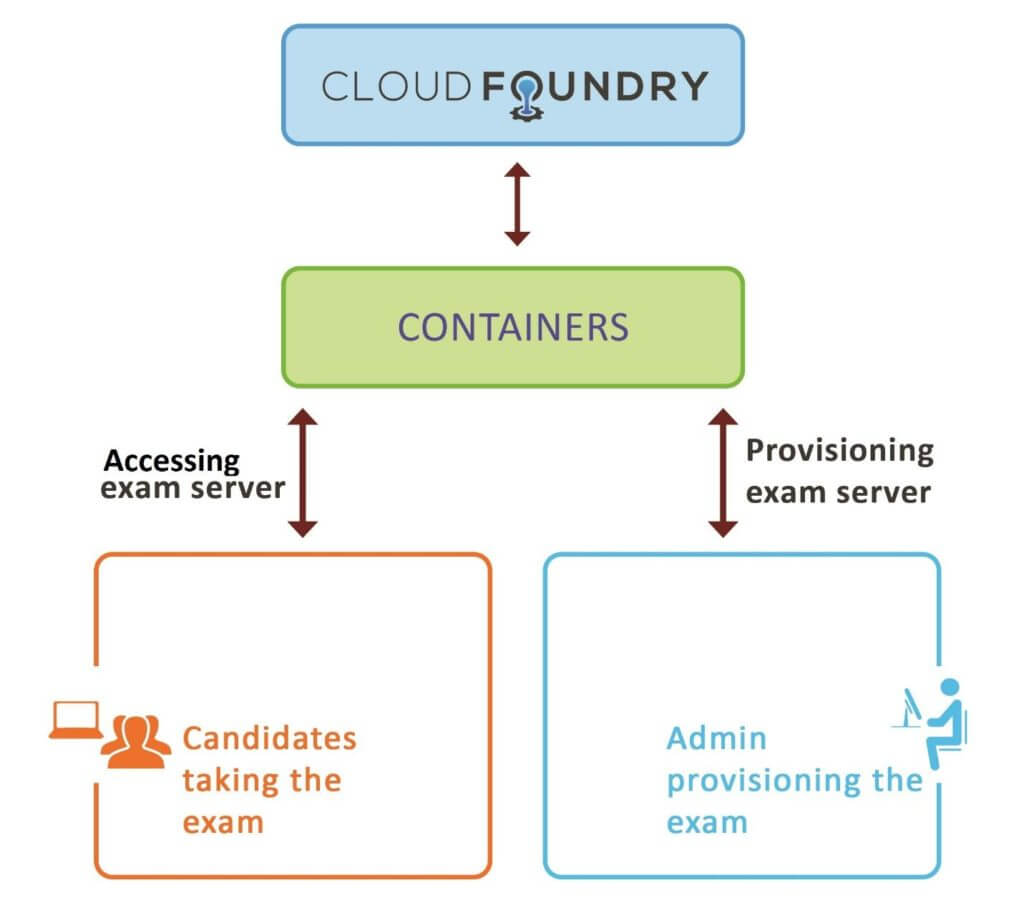

The Cloud Foundry examination process includes exam provisioning, proctoring, grading and result announcement. The exam architecture is shown in the image below:

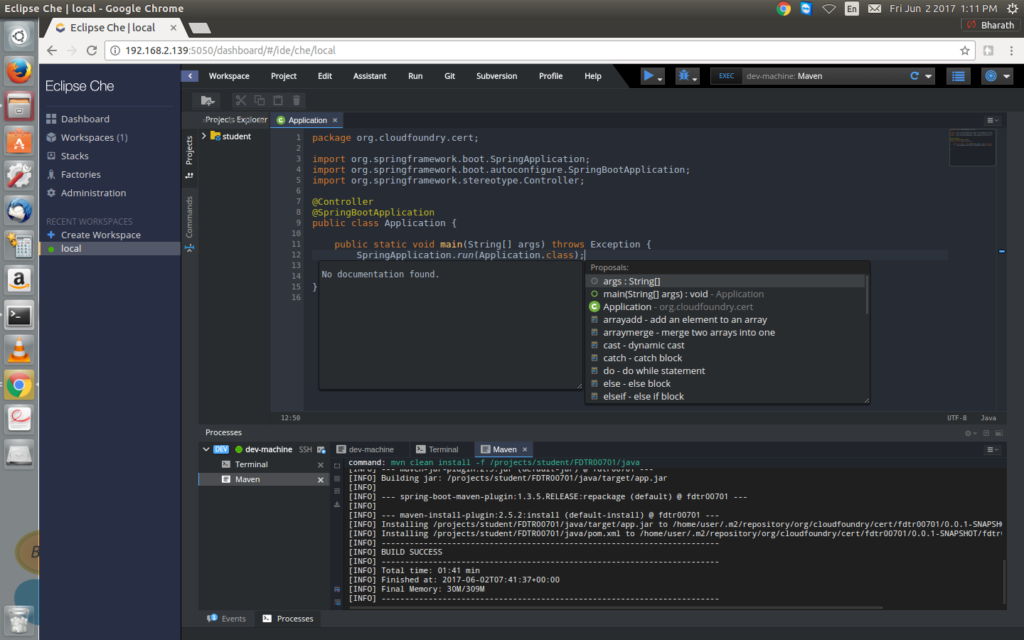

Python was chosen for the question provisioning, configuration and grading automation scripts to ensure compatibility with the Linux Foundation exam platform. The Biarca team created a Python client library to address some of the unique requirements of administering the CF exam on the LF platform. One of the issues the team had to overcome was setting up Eclipse Che, a browser-based IDE, to make the exam more intuitive and closer to a real-life environment for candidates. Multi-language support was also a requirement, so that candidates can choose their language, be it Java, Node or Ruby. This had never been done before in a performance-based test, but the team rose to the challenge.

The development team also concentrated on providing an integrated experience for the candidate, designed to let the candidate focus on the question and to deploy the best answer possible without dealing with extraneous issues.

Another important part of the work was reference answer orchestration, a pre-check that verifies the accuracy of the exam setup provisioning and the grading process. Answer orchestration takes place before the exam setup is handed to the candidate: the answer scripts are run against the individual exam items to ensure that the exam setup and grading are working as expected. Developers also had to address challenges such as route collisions among the multiple applications that candidates deploy while answering the exam.
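One common way to avoid route collisions is to derive each application's route hostname from something unique to the candidate and the exam item. The post does not say how the team actually solved this, so the Python sketch below is only an illustration under that assumption; `unique_route_host` and its parameters are hypothetical names, not part of the exam tooling.

```python
import hashlib
import re

# Hypothetical helper: not taken from the actual exam automation.
MAX_DNS_LABEL = 63   # a single DNS label may be at most 63 characters
SUFFIX_LEN = 8       # hex characters taken from the hash


def unique_route_host(app_name: str, candidate_id: str, item_id: str) -> str:
    """Build a hostname that is stable per (candidate, item) and unlikely to collide."""
    # Hash the candidate and item identifiers so the suffix is deterministic
    # but does not expose the raw identifiers in a public route.
    digest = hashlib.sha256(f"{candidate_id}:{item_id}".encode("utf-8")).hexdigest()
    suffix = digest[:SUFFIX_LEN]

    # Reduce the app name to lowercase letters, digits and hyphens.
    slug = re.sub(r"[^a-z0-9-]+", "-", app_name.lower()).strip("-") or "app"

    # Leave room for the hyphen and the suffix within one DNS label.
    slug = slug[: MAX_DNS_LABEL - SUFFIX_LEN - 1].rstrip("-")
    return f"{slug}-{suffix}"
```

Two candidates who both deploy an application called `my-app` would then receive different hostnames, while re-running the same item for the same candidate yields the same one.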

The grading software is designed to assess not just the answer, but also the thought process leading up to it. The Cloud Foundry exam includes continuous interaction with a public cloud, and the software design had to consider network failures, non-availability of the cloud platform and more.
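Tolerating network failures and platform outages usually comes down to retrying an operation with a growing delay before giving up. The sketch below shows that general pattern in Python; it is an assumption about how such handling could look, not the grading software's actual code, and `call_with_retries` and `TransientPlatformError` are hypothetical names.

```python
import time


class TransientPlatformError(Exception):
    """Hypothetical error raised when the cloud platform is temporarily unavailable."""


def call_with_retries(operation, attempts=4, base_delay=1.0, sleep=time.sleep):
    """Run operation(), retrying transient failures with exponential backoff.

    `sleep` is injectable so the delays can be observed or skipped in tests.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")

    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except (TransientPlatformError, ConnectionError, TimeoutError):
            # Out of retries: surface the failure to the caller.
            if attempt == attempts:
                raise
            # Wait base_delay, then 2x, 4x, ... before the next attempt.
            sleep(base_delay * 2 ** (attempt - 1))
```

A grading step that queries the platform can be wrapped as `call_with_retries(lambda: check_item(...))`, where `check_item` stands for whatever check the step performs. Only failures that look transient are retried; any other exception propagates immediately so that genuine grading errors are not masked.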

The grading scripts had to capture the raw score for each item answered by the candidate. These raw scores are then adjusted to account for the different weights assigned to items based on factors such as complexity. The culmination of this is the final grade and the publication of a Pass/Fail result.
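The weighting step can be illustrated with a small Python sketch. The actual weights, scoring scale and passing threshold are not given in this post, so the `ItemResult` fields, the weighted-average formula and the `pass_mark` default of 70 below are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class ItemResult:
    """Hypothetical record of one graded exam item."""
    item_id: str
    raw_score: float   # points the candidate earned on this item
    max_score: float   # points available on this item
    weight: float      # relative importance, e.g. higher for complex items


def weighted_percentage(results: Iterable[ItemResult]) -> float:
    """Return the weighted average of per-item scores as a percentage."""
    results = list(results)
    total_weight = sum(r.weight for r in results)
    if total_weight <= 0:
        raise ValueError("total weight must be positive")
    # Normalize each raw score to 0..1 before applying its weight.
    earned = sum(r.weight * (r.raw_score / r.max_score) for r in results)
    return 100.0 * earned / total_weight


def grade(results: Iterable[ItemResult], pass_mark: float = 70.0) -> Tuple[str, float]:
    """Return ("Pass" or "Fail", weighted percentage). pass_mark is an assumed value."""
    pct = weighted_percentage(results)
    return ("Pass" if pct >= pass_mark else "Fail"), pct
```

For example, an item with weight 1 scored 5 out of 10 and an item with weight 3 scored 10 out of 10 give (1 × 0.5 + 3 × 1.0) / 4 = 87.5 percent, which passes under the assumed threshold.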

The development team wrapped up its work by December 2016. The months of January and February 2017 were focused on alpha testing the exam, followed by beta testing in March and April.

The exam is being formally launched today, June 13, at Cloud Foundry Summit Silicon Valley in Santa Clara.

This article originally appeared at Cloud Foundry.