While our updated Linux.com boasts a clean look and fresh interface for our users, there’s also an entirely new infrastructure stack behind it, and we’re happy to take you on a tour. Linux.com serves over two million page views a month, providing news and technical articles as well as hosting a dynamic community of logged-in users in the forums and Q&A sections of the site.

The previous platform running Linux.com suffered from several scalability problems. Most significantly, it had no native ability to cache and re-serve the same pages to anonymous visitors. Beyond that, the underlying web application and custom code were also slow to generate each individual page view.

The new Linux.com is built on Drupal, an open source content management platform (or web development framework, depending on your perspective). By default, Drupal ensures that pages served to anonymous users are general enough (not based on sessions or cookies) and carry the correct flags (HTTP cache control headers) to be cached safely. This allows Drupal to be placed behind a stack of standards-compliant caches, which improves the performance and reliability of both page content (for anonymous visitors) and static content like images (for all visitors, including logged-in users).
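To make the anonymous-versus-logged-in distinction concrete, here is a minimal Python sketch of the kind of decision an application makes when choosing cache headers. It is an illustration rather than Drupal's actual code: the function names, the `SESS`/`SSESS` cookie-prefix check, the 300-second lifetime, and the exact header values are assumptions chosen for the example.

```python
def is_anonymous(cookies: dict) -> bool:
    """Treat a request as anonymous when it carries no session cookie.

    Assumption: session cookie names start with "SESS" or "SSESS".
    """
    return not any(name.startswith(("SESS", "SSESS")) for name in cookies)


def cache_headers(cookies: dict, max_age: int = 300) -> dict:
    """Return Cache-Control headers for a page response.

    Anonymous pages are marked as publicly cacheable so that any
    standards-compliant cache in front of the application may re-serve them.
    Pages for logged-in users are marked private so shared caches skip them.
    """
    if is_anonymous(cookies):
        return {"Cache-Control": f"public, max-age={max_age}"}
    return {"Cache-Control": "private, no-cache, must-revalidate"}


if __name__ == "__main__":
    print(cache_headers({}))                     # anonymous visitor
    print(cache_headers({"SESSabc123": "xyz"}))  # logged-in user
```

Because the decision is expressed entirely in standard HTTP headers, the caches in front of the application need no knowledge of the application itself.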

The Drupal open-source ecosystem provides many modular components that can be assembled in different ways to build functionality. One advantage of reusable smaller modules is the combined development contributions of the many developers building sites with Drupal who use, reuse, and improve the same modules. While developers may appreciate more features, or fewer bugs in code refined by years of development, on the operations side this often translates into consistent use of performance best practices, like widespread use of application caching mechanisms and implementing extensible backends that can swap between basic configurations and high availability ones.

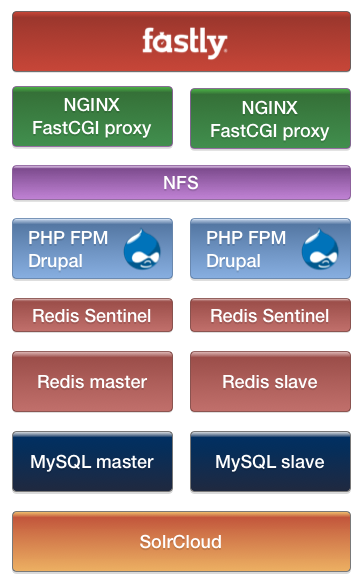

While Varnish (the caching engine that Fastly's CDN is built on) provides a very powerful cache configuration language, Linux.com also uses another caching reverse proxy, NGINX, as an application load balancer in front of our FastCGI Drupal application servers. While NGINX is less flexible for advanced caching scenarios, it is also a full-featured web server. This allows us to use NGINX to re-serve some cached dynamic content from our origin to Fastly while also serving the static portions of our site (like uploads and aggregated CSS and JS, which are shared between NGINX and our PHP backends over NFS). We run two bare-metal NGINX load balancers to distribute this load, with Pacemaker providing highly available virtual IPs. We also use separate bare-metal servers to horizontally scale out our Drupal application servers, which run the PHP FastCGI Process Manager (PHP-FPM). Our NGINX load balancers maintain a pool of FastCGI connections to all the application backends (that’s right, no Apache httpd is needed!).
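The routing decision described above can be sketched in a few lines of Python. This is a toy model, not our NGINX configuration: the `LoadBalancer` class, the backend addresses, and the `/sites/default/files/` prefix (Drupal's default public files directory) are assumptions for illustration, and round-robin is used here simply because it is NGINX's default balancing method.

```python
# Assumption: static uploads and aggregated CSS/JS live under this prefix.
STATIC_PREFIXES = ("/sites/default/files/",)


class LoadBalancer:
    """Serve static paths locally; spread everything else across backends."""

    def __init__(self, backends):
        self.backends = list(backends)
        self._next = 0  # index of the backend that gets the next request

    def route(self, path: str):
        # Static content is read from the shared filesystem by the
        # load balancer itself and never reaches PHP.
        if path.startswith(STATIC_PREFIXES):
            return ("static", path)
        # Dynamic requests go to the FastCGI backends in round-robin order.
        backend = self.backends[self._next % len(self.backends)]
        self._next += 1
        return ("fastcgi", backend)


if __name__ == "__main__":
    lb = LoadBalancer(["app1:9000", "app2:9000"])  # hypothetical backends
    for path in ["/sites/default/files/css/site.css", "/forums", "/news", "/"]:
        print(path, "->", lb.route(path))
```

A real load balancer also tracks backend health and reuses connections, which this sketch leaves out.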

We’re scaling out the default Drupal caching system by using Redis, which provides much faster key/value storage than storing the cache in a relational database. We have opted to use Redis in a master/slave replication configuration, with Redis Sentinel handling master failover and providing a configuration store that Drupal uses to query the current master. Each Drupal node has its own Redis Sentinel process for a near-instant master lookup. Of course, the cache isn’t designed to store everything, so we have separate database servers to store Linux.com’s data. These are in a fairly typical MySQL replication setup, using slaves to scale out reads and for failover.
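For readers curious what a master lookup looks like on the wire, the following Python sketch speaks the Redis protocol (RESP) directly to a Sentinel using only the standard library. It is not the code Drupal runs: the function names are made up for this example, `mymaster` is a placeholder master name, and the sketch assumes a Sentinel listening on the default port 26379 on localhost.

```python
import socket


def encode_command(*args: str) -> bytes:
    """Encode a command as a RESP array of bulk strings."""
    parts = [f"*{len(args)}\r\n".encode()]
    for arg in args:
        data = arg.encode()
        parts.append(b"$" + str(len(data)).encode() + b"\r\n" + data + b"\r\n")
    return b"".join(parts)


def parse_master_reply(reply: bytes):
    """Parse the reply to SENTINEL get-master-addr-by-name.

    Returns (host, port), or None when Sentinel does not know the master
    (it then answers with a null reply instead of a two-element array).
    """
    lines = reply.split(b"\r\n")
    if lines[0] != b"*2":
        return None
    # Layout: [b"*2", b"$<len>", host, b"$<len>", port, b""]
    return lines[2].decode(), int(lines[4])


def lookup_master(name: str, host: str = "127.0.0.1", port: int = 26379,
                  timeout: float = 0.5):
    """Ask a local Sentinel for the current master address."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(encode_command("SENTINEL", "get-master-addr-by-name", name))
        # The reply is tiny, so a single recv is assumed to be enough here.
        return parse_master_reply(sock.recv(4096))
```

With a Sentinel running locally, `lookup_master("mymaster")` would return the address to connect to. Keeping a Sentinel on every node means this round trip never leaves the machine.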

Finally, we’ve replaced the default Drupal search system with a search index powered by SolrCloud: multiple Solr servers in replication, with cluster services provided by ZooKeeper. We’re using Drupal SearchAPI with the Solr backend module, which is pointing to an NGINX HTTP reverse proxy that load balances the Solr servers.
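From the client's point of view, a search through such a proxy is an ordinary HTTP request to Solr's `select` handler. The Python sketch below only builds the request URL; it is not what the Search API module does internally, and the proxy host name, port, and the `linuxcom` collection name are hypothetical.

```python
from urllib.parse import urlencode


def build_search_url(base_url: str, collection: str, query: str,
                     rows: int = 10) -> str:
    """Build a Solr select URL that asks for JSON results.

    base_url points at the load-balancing proxy rather than at any single
    Solr server, so the client does not care which node answers.
    """
    params = urlencode({"q": query, "rows": rows, "wt": "json"})
    return f"{base_url.rstrip('/')}/solr/{collection}/select?{params}"


if __name__ == "__main__":
    # Hypothetical proxy address and collection name.
    print(build_search_url("http://solr-proxy.example.internal:8983",
                           "linuxcom", "kernel tuning"))
```

Pointing clients at the proxy keeps the Solr topology out of the application's configuration: servers can be added or removed behind it without touching Drupal.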

I hope you’ve enjoyed this tour and that it sparks some ideas for your own infrastructure projects. I’m proud of the engineering that went into assembling these components (configuration nuances, tuning, testing, and myriad additional supporting services), but it’s also hard to do a project like this and not appreciate all the work done by the individual developers and companies who contribute to open source and have created incredible open source technologies. The next time the latest open source pro blog or technology news loads snappily on Linux.com, you can be grateful for this too!